A deep dive into the hidden suppression techniques recently exposed by Jemima Khan’s data-backed plea

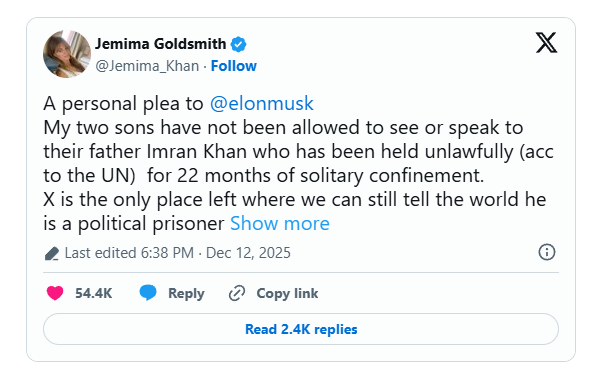

The concept of shadow banning has moved from internet conspiracy theories to headline news following Jemima Khan’s recent allegations against X. Simply put, the practice involves hiding a user’s content from the wider community without their knowledge. Unlike a suspension, the user can still post, comment, and like. However, their visibility is drastically reduced. In her case, Jemima Khan provided data showing a 97 per cent drop in her account’s reach. This suggests that the platform’s algorithm may be deliberately hiding her posts to stifle specific political narratives.

The Mechanics of Invisibility

Technically, shadow banning operates through “visibility filtering”. Algorithms scan posts for specific keywords, hashtags, or user behaviours that raise “safety” flags. Once a post is flagged, the system removes it from search results and “For You” feeds. The content then appears only to people who visit the user’s profile directly. Jemima Khan used Elon Musk’s own AI tool, Grok, to diagnose this. The AI reportedly confirmed that her posts about her ex-husband, Imran Khan, were being throttled. If accurate, this indicates that the suppression was content-specific rather than a general penalty on her account.
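
To make the mechanism concrete, here is a minimal Python sketch of keyword-based visibility filtering as described above. It is an illustration only, not X’s actual system: the `Post` class, the `FLAGGED_TERMS` list, the surface names, and both functions are hypothetical, and real platforms do not publish their rules.

```python
from dataclasses import dataclass

# Hypothetical flagged terms; real platforms do not publish such lists.
FLAGGED_TERMS = {"example-flagged-term"}

# Hypothetical surfaces where a post can be shown.
DISCOVERY_SURFACES = {"search", "for_you"}
PROFILE_SURFACE = "profile"


@dataclass
class Post:
    author: str
    text: str
    restricted: bool = False


def apply_visibility_filter(post, flagged_terms=FLAGGED_TERMS):
    """Mark a post as restricted if its text contains any flagged term."""
    text = post.text.lower()
    post.restricted = any(term in text for term in flagged_terms)
    return post


def is_visible(post, surface):
    """Restricted posts stay on the author's profile but leave discovery surfaces."""
    if surface == PROFILE_SURFACE:
        return True
    if surface in DISCOVERY_SURFACES:
        return not post.restricted
    raise ValueError(f"unknown surface: {surface}")


flagged = apply_visibility_filter(Post("user_a", "An update about example-flagged-term"))
normal = apply_visibility_filter(Post("user_a", "A photo of my lunch"))

for surface in ("search", "for_you", "profile"):
    print(surface, is_visible(flagged, surface), is_visible(normal, surface))
```

The key point the sketch captures is that nothing is deleted. The author still sees the post on their own profile, which is why the restriction is so hard to notice from the inside.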

Political Implications and “Freedom of Reach”

This incident highlights the grey area between moderation and censorship. Elon Musk has previously described his policy as “freedom of speech, not freedom of reach”. This philosophy effectively legitimises shadow banning as a tool to de-amplify “negative” content without removing it. However, critics argue this is dangerous when applied to political dissent. If algorithms quietly hide updates about political prisoners like Imran Khan, they effectively shape public opinion without transparency. Consequently, users are left shouting into a void, believing their message is being heard when it is actually silenced.

The Challenge of Detection

Historically, proving that shadow banning is happening has been nearly impossible for the average user. Platforms often deny the practice exists, blaming “glitches” or natural algorithm shifts. Yet, Jemima Khan’s case introduces a new variable: AI auditing. By using Grok to analyse her impression data, she bypassed the usual corporate denials. If more users begin using AI tools to audit their own social media performance, platforms might be forced to disclose exactly when and why they restrict reach. This could lead to a demand for “algorithm transparency laws” globally.
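
A basic version of this kind of audit does not require AI at all. The Python sketch below assumes a user has exported their own per-post impression counts. The function names, the data layout, and the sample figures are all hypothetical and are not Jemima Khan’s data. It compares average impressions for posts mentioning a topic against everything else.

```python
from statistics import mean


def percent_drop(baseline, current):
    """Percentage fall from a baseline impression count to a current one."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) * 100 / baseline


def topic_reach_gap(posts, keyword):
    """Compare mean impressions of posts mentioning `keyword` with all other posts.

    Returns the percentage by which on-topic posts trail the rest,
    or None if either group is empty.
    """
    keyword = keyword.lower()
    on_topic = [p["impressions"] for p in posts if keyword in p["text"].lower()]
    others = [p["impressions"] for p in posts if keyword not in p["text"].lower()]
    if not on_topic or not others:
        return None
    return percent_drop(mean(others), mean(on_topic))


# Invented sample data, for illustration only.
sample_posts = [
    {"text": "Holiday photos", "impressions": 10000},
    {"text": "Book recommendation", "impressions": 12000},
    {"text": "Update on topic-x", "impressions": 300},
    {"text": "More on Topic-X today", "impressions": 360},
]

gap = topic_reach_gap(sample_posts, "topic-x")
print(f"On-topic posts reach {gap:.0f} per cent fewer accounts on average")
```

A large gap like this is a signal, not proof. Posting time, audience interest, and ordinary ranking changes can all produce similar numbers, which is why disclosure from the platform itself still matters.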

The Hinge Point

The defining irony of this shadow banning controversy is the role of commercial conflict. Social media platforms are now businesses that sell “reach” as a product. By artificially lowering the organic reach of high-profile accounts, platforms implicitly encourage users to pay for verification or “boosts” to regain their audience. Therefore, what looks like political censorship might also be a brutal monetisation strategy. Jemima Khan’s case indicates that organic virality is no longer guaranteed, even for celebrities. It suggests that the era of the “public town square” is over, replaced by a “pay-to-speak” model.